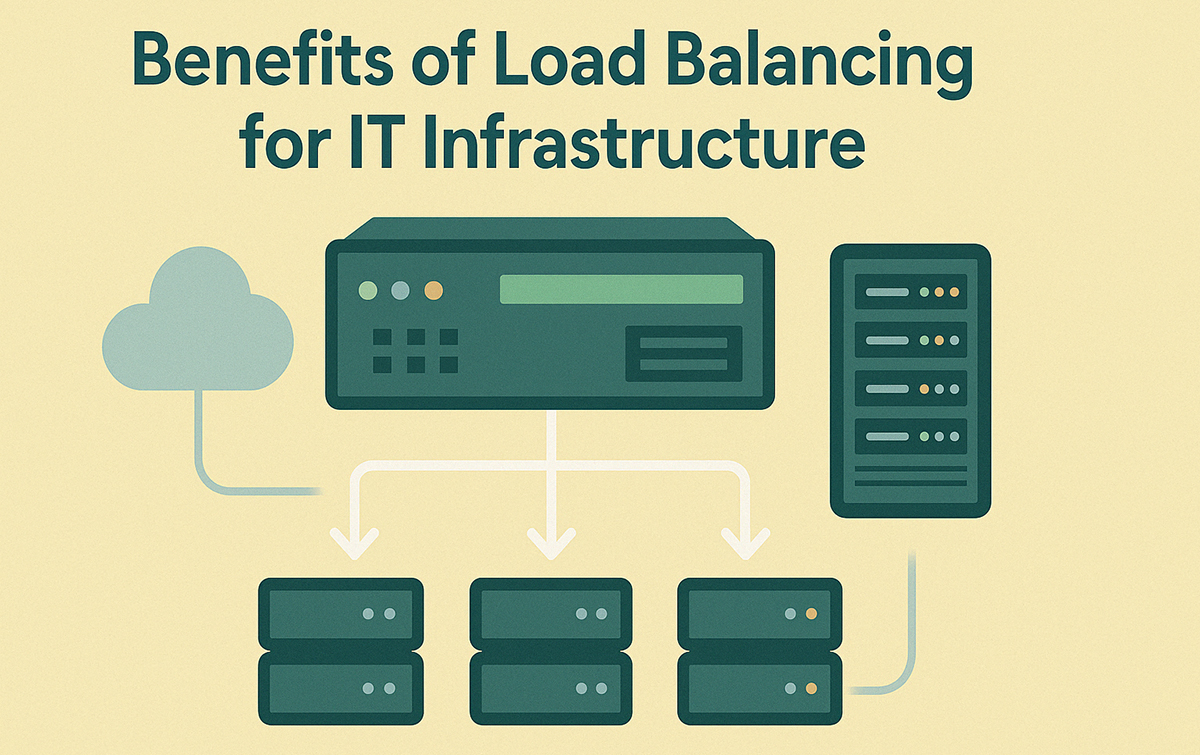

In the contemporary digital landscape, the expectation for uninterrupted service and seamless scalability is paramount. At the heart of meeting these demands lies a critical technology: load balancing. Often operating behind the scenes, load balancing is a fundamental pillar of robust IT infrastructure, orchestrating the distribution of workloads across multiple computing resources. Its significance extends beyond mere traffic management, underpinning system resilience, performance optimization, and enhanced security.

At its core, load balancing is the strategic distribution of processing workloads among a network of servers or resources. It keeps any single server from becoming overwhelmed or becoming a single point of failure, which improves resource utilization and responsiveness. Beyond this, the benefits of a thoughtfully crafted load balancing strategy reach deep into the core of IT operations.

Ensuring Unwavering Availability

High availability means keeping a service operational and reachable even when individual components fail, and load balancing is a cornerstone in achieving it. By continuously monitoring the health and status of individual servers, a load balancer can intelligently redirect incoming traffic away from any node experiencing performance degradation or outright failure.

Consider an application deployed across several servers. If one of these servers fails, the load balancer reroutes user requests to the remaining healthy servers. Apart from requests that were already in flight on the failed server, this failover is transparent to end users, who continue to receive a consistent, uninterrupted experience. This level of fault tolerance is indispensable for mission-critical applications, online platforms, and any service where downtime can have significant operational or financial repercussions.
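To make the failover idea concrete, here is a minimal Python sketch, assuming a simple round-robin pool and a caller-supplied `probe` function that stands in for a real health check. The `FailoverBalancer` class, the `/healthz` endpoint mentioned in a comment, and the server names are all hypothetical. Backends that fail the check are skipped until a later check marks them healthy again.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True


class FailoverBalancer:
    """Round robin over a pool, skipping backends that failed their health check."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0  # current rotation position

    def run_health_checks(self, probe):
        # probe(name) -> bool is a caller-supplied stand-in for a real check,
        # such as an HTTP GET to a hypothetical /healthz endpoint.
        for backend in self.backends:
            backend.healthy = probe(backend.name)

    def pick(self):
        # Visit each backend at most once, starting from the rotation point,
        # and return the first healthy one.
        n = len(self.backends)
        for _ in range(n):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % n
            if backend.healthy:
                return backend.name
        raise RuntimeError("no healthy backends available")


lb = FailoverBalancer([Backend("app-1"), Backend("app-2"), Backend("app-3")])
lb.run_health_checks(lambda name: name != "app-2")  # simulate app-2 failing
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Production balancers usually require several consecutive failed checks before ejecting a server, and several successful ones before re-admitting it, so that a briefly slow server does not flap in and out of the pool; this sketch leaves that refinement out.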

Modern load balancing solutions go beyond simple redirection. They often include features such as session persistence, which keeps a user’s session on the same server for continuity; SSL/TLS offloading, which decrypts secure traffic at the balancer to reduce the processing burden on backend servers; and content-based routing, which directs each request according to what is being requested (for example, its URL path). Together, these capabilities support both uninterrupted service and an optimized, contextually relevant user experience.
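Session persistence is often implemented with a cookie that records which server a client was assigned to. The sketch below assumes that approach on top of round robin; the `lb_backend` cookie name and the `StickyBalancer` class are hypothetical, and real products define their own cookie names and formats.

```python
import itertools


class StickyBalancer:
    """Cookie-based session persistence layered on round robin (a sketch)."""

    COOKIE = "lb_backend"  # hypothetical cookie name

    def __init__(self, backends):
        self.backends = list(backends)
        self._rr = itertools.cycle(self.backends)

    def route(self, cookies):
        """Return (backend, cookies_to_set) for a request's cookie dict."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.backends:
            # Returning client whose server is still in the pool: stay there.
            return pinned, {}
        # New client, or its server has left the pool: pick one and pin it.
        backend = next(self._rr)
        return backend, {self.COOKIE: backend}


lb = StickyBalancer(["app-1", "app-2"])
first, set_cookie = lb.route({})   # new client is assigned app-1
again, _ = lb.route(set_cookie)    # same client presents its cookie: app-1 again
other, _ = lb.route({})            # a different new client gets app-2
print(first, again, other)         # app-1 app-1 app-2
```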

Driving Efficient Scalability

Scalability is the ability of a system to handle increasing workloads without a decline in performance. Load balancing is central to horizontal scaling, and it can also ease vertical scaling by letting one server be taken out of rotation for an upgrade while the others carry the traffic.

Vertical scaling, often referred to as “scaling up,” involves adding resources to a single server (e.g., more CPU or RAM). While sometimes necessary, it is limited by cost, by the maximum size of a single machine, and by potential downtime during upgrades.

Horizontal scaling, commonly known as “scaling out,” adds more servers to the resource pool to handle higher traffic. Load balancers are the key orchestrators of this approach: as demand grows, new servers can be registered with the load-balanced pool, and the balancer automatically distributes incoming requests across them. As a result, performance can remain consistent even during peak usage periods.
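As a toy illustration of scaling out, the following simulation assumes identical servers and plain round-robin distribution; the `distribute` helper and server names are hypothetical. Registering a third server cuts each server's share of the same 600 requests from 300 to 200.

```python
from collections import Counter


def distribute(pool, num_requests):
    """Spread num_requests over pool in round-robin order; count per server."""
    return Counter(pool[i % len(pool)] for i in range(num_requests))


pool = ["app-1", "app-2"]
before = distribute(pool, 600)
pool.append("app-3")  # scale out: register one more server
after = distribute(pool, 600)
print(dict(before))  # {'app-1': 300, 'app-2': 300}
print(dict(after))   # {'app-1': 200, 'app-2': 200, 'app-3': 200}
```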

To distribute traffic across multiple servers, load balancers use various algorithms. Round Robin, the simplest, sends requests to each server in turn. Least Connections sends each new request to the server with the fewest active connections, which makes better use of resources when sessions are long-lived. IP Hashing consistently assigns requests from a given client IP address to the same server, which matters for applications that keep session state on a particular server. For environments with servers of differing capacity, Weighted Round Robin and Weighted Least Connections let administrators assign each server a weight that reflects its capacity.
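The algorithms named above can each be sketched in a few lines of Python. These are illustrative versions under simplifying assumptions (static server lists, connection counts supplied by the caller), not the exact implementations of any particular product. The weighted round robin shown is the "smooth" variant, which interleaves picks instead of sending one server several requests in a row.

```python
import hashlib
import itertools


def round_robin(servers):
    """Round Robin: hand out servers in a fixed, repeating order."""
    return itertools.cycle(servers)


def least_connections(active):
    """Least Connections: active maps server -> open connection count."""
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """IP Hashing: the same client IP always maps to the same server.

    SHA-256 is used because Python's built-in hash() of a str is randomized
    per process, which would break the mapping across restarts.
    """
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def weighted_round_robin(weights):
    """Smooth Weighted Round Robin: weights maps server -> integer weight.

    Each step, every server's score grows by its weight; the top scorer is
    chosen and its score is reduced by the total weight. Over time, each
    server is chosen in proportion to its weight, with picks interleaved.
    """
    scores = {server: 0 for server in weights}
    total = sum(weights.values())
    while True:
        for server, weight in weights.items():
            scores[server] += weight
        best = max(scores, key=scores.get)
        scores[best] -= total
        yield best


def weighted_least_connections(active, weights):
    """Weighted Least Connections: fewest connections per unit of weight."""
    return min(active, key=lambda server: active[server] / weights[server])


rr = round_robin(["app-1", "app-2", "app-3"])
print([next(rr) for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
print(least_connections({"app-1": 12, "app-2": 3, "app-3": 7}))  # app-2
print(ip_hash("203.0.113.7", ["app-1", "app-2", "app-3"]))  # same server on every call
wrr = weighted_round_robin({"big": 3, "small": 1})
print([next(wrr) for _ in range(8)])
# ['big', 'big', 'small', 'big', 'big', 'big', 'small', 'big']
print(weighted_least_connections({"big": 6, "small": 4}, {"big": 3, "small": 1}))  # big
```

One design caveat: taking the hash modulo the number of servers, as `ip_hash` does, remaps most clients whenever a server is added or removed. Consistent hashing is the usual remedy when that churn matters.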

Fortifying Security Posture

Beyond availability and scalability, load balancing plays a significant role in enhancing the security of IT infrastructure. Many contemporary load balancers are equipped with integrated security features that act as a first line of defense against various threats.

One critical security benefit is protection against Distributed Denial-of-Service (DDoS) attacks. Load balancers can help identify and mitigate the abnormal traffic spikes characteristic of DDoS attacks by spreading load across servers, rate-limiting clients, and filtering or redirecting malicious traffic before it reaches backend servers.
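Rate limiting is one of the simpler filtering techniques a load balancer can apply. The sketch below assumes a per-client token bucket; the `rate` and `capacity` values and the class names are hypothetical, and the fake clock exists only to keep the example deterministic. On its own, this does not stop a highly distributed attack in which each source stays under the limit, and a real limiter would also expire idle entries.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: the caller may drop or delay the request


class PerClientLimiter:
    """One token bucket per client IP (idle entries are never expired here)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, client_ip):
        if client_ip not in self.buckets:
            self.buckets[client_ip] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client_ip].allow()


now = [0.0]  # fake clock, advanced by hand
limiter = PerClientLimiter(rate=5, capacity=10, clock=lambda: now[0])
burst = [limiter.allow("198.51.100.9") for _ in range(12)]
print(burst.count(True))  # 10: the 11th and 12th requests are rejected
now[0] += 1.0  # one second later, 5 tokens have been refilled
refilled = [limiter.allow("198.51.100.9") for _ in range(6)]
print(refilled.count(True))  # 5
other = limiter.allow("192.0.2.1")
print(other)  # True: a different client has its own bucket
```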

SSL offloading, while primarily a performance optimization, also contributes to security by centralizing certificate management and reducing the attack surface on individual web servers. Some advanced load balancers also integrate a Web Application Firewall (WAF), which inspects the contents of requests to identify and block common web attacks such as SQL injection and cross-site scripting (XSS). By embedding security controls at the system's entry point, load balancers strengthen the overall security posture.
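To show the basic shape of WAF-style inspection, here is a deliberately tiny, signature-based filter over a request's query string. The `RULES` patterns and the `inspect_query` function are hypothetical and easy to evade; real WAF deployments (for example, ModSecurity with the OWASP Core Rule Set) apply far larger rule sets, normalize input in many more ways, and inspect headers and bodies as well.

```python
import re
from urllib.parse import unquote_plus

# Hypothetical, deliberately tiny signature set for illustration only.
RULES = {
    "sql_injection": re.compile(
        r"['\"]\s*(or|and)\s+\S+\s*=\s*\S+|\bunion\s+select\b", re.IGNORECASE
    ),
    "xss": re.compile(r"<\s*script\b|javascript:", re.IGNORECASE),
}


def inspect_query(query_string):
    """Return the name of the first rule the decoded query matches, or None."""
    decoded = unquote_plus(query_string)  # decode %27, + and similar first
    for rule_name, pattern in RULES.items():
        if pattern.search(decoded):
            return rule_name
    return None


for qs in ["q=load+balancing", "id=1%27%20OR%201%3D1", "q=%3Cscript%3Ealert(1)%3C%2Fscript%3E"]:
    verdict = inspect_query(qs)
    print(qs, "->", "blocked: " + verdict if verdict else "allowed")
```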

Navigating the Landscape of Load Balancer Types

Choosing the right type of load balancer is crucial for performance and for meeting specific architectural requirements. Load balancers can be classified both by how they are deployed, as described below, and by the layer of the Open Systems Interconnection (OSI) model at which they operate.

Hardware load balancers are dedicated physical appliances that deliver high performance and throughput. Although they typically cost more, they are common in environments that demand stringent security and handle high traffic volumes.

Software load balancers, including open-source options such as HAProxy and NGINX, offer greater flexibility and cost-effectiveness. Because they run on commodity hardware or virtual machines, they are well suited to dynamic cloud environments.

Cloud-native load balancers, offered by major cloud providers, integrate with other cloud services, provide auto-scaling and multi-protocol support, and eliminate the need to manage hardware.

Global Server Load Balancers (GSLBs) distribute traffic across geographically dispersed data centers, enhancing disaster recovery capabilities, improving performance for global users, and enabling localized service delivery.
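A core GSLB decision is choosing which data center should serve a given client. The sketch below assumes the balancer already knows each site's health and its measured latency per client region; the site names, region keys, and latency figures are made up. In practice, GSLBs commonly enact this decision through DNS, answering each lookup with the chosen site's address.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    healthy: bool = True
    latency_ms: dict = field(default_factory=dict)  # client region -> measured RTT


def choose_site(sites, client_region):
    """Return the healthy site with the lowest measured latency for a region."""
    candidates = [s for s in sites if s.healthy and client_region in s.latency_ms]
    if not candidates:
        raise RuntimeError(f"no healthy site can serve region {client_region!r}")
    return min(candidates, key=lambda s: s.latency_ms[client_region]).name


sites = [
    Site("us-east", latency_ms={"eu": 90, "us": 15}),
    Site("eu-west", latency_ms={"eu": 12, "us": 85}),
    Site("ap-south", latency_ms={"eu": 140, "us": 210}),
]
print(choose_site(sites, "eu"))  # eu-west
sites[1].healthy = False  # eu-west goes down, e.g. during a data-center outage
print(choose_site(sites, "eu"))  # us-east: lowest latency among the remaining sites
```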

Layer 4 vs. Layer 7: Understanding the Distinction

Load balancing at Layer 4 (Transport Layer) differs significantly from load balancing at Layer 7 (Application Layer). Layer 4 load balancers operate at the TCP/UDP level, making routing decisions based on IP addresses and port numbers without inspecting the payload. This makes them highly efficient and well suited to traffic that does not use HTTP.
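The following sketch shows a Layer 4 decision that uses only transport-level fields: it hashes a connection's 5-tuple (protocol plus source and destination address and port) and never reads the payload. The `Connection` type, the port-to-pool mapping, and the server names are hypothetical.

```python
import hashlib
from typing import NamedTuple


class Connection(NamedTuple):
    """Transport-layer view of a flow; no payload is consulted at Layer 4."""
    protocol: str  # "tcp" or "udp"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def l4_pick(conn, pools):
    """Choose a backend from the listener's pool by hashing the 5-tuple.

    Every packet of the same flow hashes identically, so a TCP connection
    stays on one backend for its whole lifetime.
    """
    backends = pools[conn.dst_port]  # one backend pool per listening port
    key = "|".join(map(str, conn)).encode()
    h = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return backends[h % len(backends)]


pools = {5432: ["db-1", "db-2"], 53: ["dns-1", "dns-2"]}  # PostgreSQL and DNS ports
flow = Connection("tcp", "203.0.113.7", 51000, "192.0.2.10", 5432)
print(l4_pick(flow, pools))  # db-1 or db-2, identical for every packet of this flow
query = Connection("udp", "198.51.100.9", 40000, "192.0.2.10", 53)
print(l4_pick(query, pools))  # dns-1 or dns-2
```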

In contrast, Layer 7 load balancers are application-aware. They can inspect the content of requests, including HTTP headers, cookies, and URLs, and route on application-specific attributes; for HTTPS traffic, this requires terminating TLS at the balancer. This is particularly beneficial for web applications, microservices architectures, and content-based routing scenarios.
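By contrast with the transport-level hashing of Layer 4, a Layer 7 router can match on HTTP attributes. This sketch assumes requests have already been parsed into a path and a header dictionary; the route table, the `X-Canary` header, and the pool names are hypothetical. Rules are checked in order and the first match wins.

```python
def l7_route(path, headers, routes, default_pool):
    """Pick a backend pool from HTTP-level attributes (first matching rule wins)."""
    # HTTP header names are case-insensitive, so compare them in lowercase.
    lowered = {name.lower(): value for name, value in headers.items()}
    for rule in routes:
        prefix = rule.get("path_prefix")
        if prefix is not None and not path.startswith(prefix):
            continue
        header = rule.get("header")
        if header is not None:
            name, value = header
            if lowered.get(name.lower()) != value:
                continue
        return rule["pool"]
    return default_pool


ROUTES = [
    {"header": ("X-Canary", "1"), "pool": "canary"},  # opt-in test traffic
    {"path_prefix": "/api/", "pool": "api-service"},
    {"path_prefix": "/static/", "pool": "static-assets"},
]
print(l7_route("/api/orders", {}, ROUTES, "web-frontend"))                 # api-service
print(l7_route("/api/orders", {"x-canary": "1"}, ROUTES, "web-frontend"))  # canary
print(l7_route("/static/logo.png", {}, ROUTES, "web-frontend"))            # static-assets
print(l7_route("/about", {}, ROUTES, "web-frontend"))                      # web-frontend
```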